Information theory is rooted in the work of Claude Shannon, one of the most influential scientists of the 20th century, who is often called the father of the digital age. In his landmark 1948 paper, "A Mathematical Theory of Communication", he developed an elegant framework, now known as information theory, that introduced the modern concept of information and set out how efficiently information can be acquired, compressed, stored, and transmitted. Just as Newton’s and Einstein’s theories shaped our understanding of the physical world, Shannon’s information theory has shaped our understanding of the digital world.

This fascinating video, produced by University of California Television (UCTV), explores Claude Shannon’s life and the major influence of his work on today’s digital world through interviews with his friends and colleagues.

Information Theory (Coding theorems)

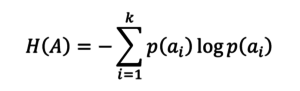

For noiseless channels (source coding).
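The source coding theorem for noiseless channels says that no uniquely decodable code can have an average codeword length L below the source entropy H(X) bits per symbol, and that an optimal prefix code comes within one bit of it: H(X) ≤ L < H(X) + 1. The sketch below checks this on a small example. It assumes a memoryless source with made-up (hypothetical) probabilities in `source` and uses Huffman coding as one concrete optimal prefix code. The helper names are illustrative, and the example is not a reproduction of the figure above.

```python
import heapq
import math
from itertools import count


def entropy(probs):
    """Shannon entropy in bits: H(X) = -sum p * log2(p)."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def huffman_code_lengths(probs):
    """Return the codeword length of each symbol in a binary Huffman code."""
    tiebreak = count()  # unique counter so the heap never compares dicts
    heap = [(p, next(tiebreak), {sym: 0}) for sym, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A lone symbol still needs one bit per transmission.
        return {sym: 1 for sym in probs}
    while len(heap) > 1:
        # Merge the two least probable subtrees.
        p1, _, a = heapq.heappop(heap)
        p2, _, b = heapq.heappop(heap)
        # Merging adds one bit to every codeword inside both subtrees.
        merged = {s: d + 1 for s, d in {**a, **b}.items()}
        heapq.heappush(heap, (p1 + p2, next(tiebreak), merged))
    return heap[0][2]


def average_length(probs, lengths):
    """Expected codeword length in bits per symbol."""
    return sum(probs[s] * lengths[s] for s in probs)


# Hypothetical source distribution used only for illustration.
source = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = huffman_code_lengths(source)
H = entropy(source)
L = average_length(source, lengths)
print(f"H = {H:.3f} bits/symbol, L = {L:.3f} bits/symbol")
```

For this dyadic source (every probability is a power of 1/2) the Huffman lengths are 1, 2, 3 and 3 bits, so the average length equals the entropy, 1.75 bits per symbol. For a non-dyadic distribution the Huffman average length lands strictly between H(X) and H(X) + 1.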

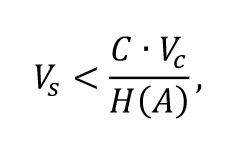

For noisy channels (channel coding).
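The noisy-channel coding theorem says that a channel with capacity C, the maximum of the mutual information I(X;Y) over input distributions, supports communication at any rate below C with arbitrarily small error probability, while reliable communication is impossible at rates above C. As a minimal sketch, assuming a binary symmetric channel that flips each bit independently with a hypothetical crossover probability p = 0.11, the code below compares the closed-form capacity C = 1 - H_b(p) with a brute-force maximization of I(X;Y) over the input distribution. The function names are illustrative only.

```python
import math


def binary_entropy(p):
    """Binary entropy H_b(p) in bits, with H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


def bsc_mutual_information(q, p):
    """I(X;Y) for a BSC when the input is 1 with probability q.

    I(X;Y) = H(Y) - H(Y|X), where P(Y=1) = q(1-p) + (1-q)p and H(Y|X) = H_b(p).
    """
    y1 = q * (1 - p) + (1 - q) * p
    return binary_entropy(y1) - binary_entropy(p)


p = 0.11  # hypothetical crossover probability
C = bsc_capacity(p)
# Capacity is the maximum of I(X;Y) over input distributions; search a grid of q.
best = max(bsc_mutual_information(k / 1000, p) for k in range(1001))
print(f"C = {C:.4f} bits/use, grid max of I(X;Y) = {best:.4f}")
```

The grid search peaks at a uniform input (q = 0.5) and agrees with the closed form. With p = 0.11 the capacity is close to 0.5 bits per channel use, so a good code for this channel could carry roughly one information bit for every two transmitted bits.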

Information theory in the post-Shannon period

Below is a downloadable PowerPoint presentation given by Michelle Effros and Vince Poor for the Shannon Centenary in 2016.